In our post on the virtual print factory, we saw how an art director can be simulated and used in a simulated press check to optimize print production. There are other applications that can be revolutionized through the online simulation of production. Today, we have a look at the future of energy production and delivery. Society is improved by making it more efficient.

In Basel, the private company Gasindustrie was founded in 1852 and nationalized in 1867. Over the years other utilities were added: water delivery, water production, electricity, long-distance heating, refuse processing, and a fiber network for broadband internet and telephony. The name became IWB, for Industrielle Werke Basel. It was privatized in 2010 (CEO David Thiel), but all shares belong to the Canton Basel-City. IWB is responsible for the supply of energy, water, and telecom; it has a mandate to optimize its operations (smart IWB 2020).

During industrialization, as in most countries, Switzerland's main energy source was coal. After World War I, not having coal mines, Switzerland boosted the education of engineers, who could then electrify the country. For example, the Crocodile locomotive was an engineering feat that could pull a freight train up the Gottardo line. In fact, the regenerative braking energy from two descending trains could pull one train up the other side of the Alps. When in the 1930s the regime in Germany started flexing its muscle and using its coal to wield power, Switzerland invested considerable brain power to wean itself off coal as much as possible. For example, the cantonal buildings in Zurich are heated with heat pumps extracting heat from the Limmat.

The ETH cranked out generation after generation of skilled engineers who designed hydroelectric dams, turbines, and power distribution systems. Many plants were of the pump type, consisting of an upper and a lower reservoir: during the day water falls and generates power, while at night cheap electricity is imported from fixed throughput plants to pump the water back up.

This history is reflected in IWB's energy sources. In 2015, the energy sources for electricity in percent were

| Source | Share (%) |
| --- | --- |
| hydroelectric | 96.14 |
| wind | 0.22 |
| solar | 0.14 |
| other renewable | 3.50 |

In the 4th quarter of 2015, on the European domestic market, the cost of a kilowatt-hour (kWh) of power was 3.3 cents. However, in Basel, at the public car charge boxes, the consumer price varies between 45 and 70 cents per kWh. This is an opportunity to increase efficiency. Smart IWB 2020 aims at reducing and stabilizing the end-user energy costs.
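To get a feeling for the size of this gap, here is a minimal sketch that divides the charge box prices by the market price. It assumes, as the comparison above does, that both figures are expressed in the same currency unit; the function name `markup_factor` is ours and purely illustrative.

```python
# Prices quoted in the post, in cents per kWh.
# Assumption: both are in the same currency unit, as the post's comparison implies.
MARKET_CENTS_PER_KWH = 3.3
CHARGE_BOX_CENTS_PER_KWH = (45.0, 70.0)


def markup_factor(retail_price, market_price):
    """How many times the market price the end user pays (hypothetical helper)."""
    return retail_price / market_price


low_factor, high_factor = (
    markup_factor(price, MARKET_CENTS_PER_KWH) for price in CHARGE_BOX_CENTS_PER_KWH
)
print(f"Charge box price is {low_factor:.1f}x to {high_factor:.1f}x the market price")
```

The consumer price naturally includes grid fees, taxes, and the charging infrastructure, so the factor is not pure margin; it only shows how much room there is for optimization.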

The old big central power plants will remain and keep producing and storing energy. New small decentralized systems have been built to produce and store energy at the regional level. Now, end-users are starting to produce, store, and consume energy. Excess energy is shared at the neighborhood level.

This is what a 1 MW lithium-metal-oxide battery in Zurich looks like:

End-users must also store energy in some form to even out the network dependence during the day. There may be excess solar energy in the afternoon and a lack of energy during a cold winter night.

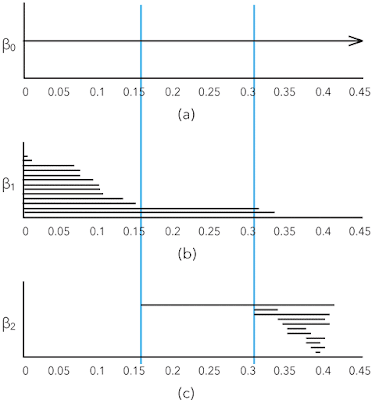

This is made possible by the new control network shown in dark gray in the figure below (for an animation see here). IWB can collect data everywhere on the network and feed it to its simulation that allows it to optimize the overall energy generation and conversion system.

Electricity companies have been using simulations for many years. For robustness, a distribution system cannot have a tree topology, because a failure at a node will black out the entire subtree below it. The required mesh topology is difficult to manage because the system has to be kept in equilibrium; otherwise, a single failure will cascade into a blackout of the entire network.
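The difference between the two topologies can be illustrated with a toy graph. The sketch below is not IWB's simulation; the node names and the helper functions `build`, `reachable`, and `blacked_out` are made up for this example. It removes one node and checks which of the remaining nodes can still be reached from the plant.

```python
from collections import deque


def build(edges):
    """Build an undirected adjacency map from a list of (a, b) lines."""
    adjacency = {}
    for a, b in edges:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def reachable(adjacency, start, failed):
    """Breadth-first search from start, skipping the failed node."""
    if start == failed:
        return set()
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor != failed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def blacked_out(adjacency, failed, source="plant"):
    """Nodes still in service but cut off from the source."""
    in_service = set(adjacency) - {failed}
    return in_service - reachable(adjacency, source, failed)


# Hypothetical toy networks: substations A, B, C fed by one plant.
TREE_EDGES = [("plant", "A"), ("A", "B"), ("A", "C")]
MESH_EDGES = TREE_EDGES + [("plant", "B"), ("B", "C")]

tree = build(TREE_EDGES)
mesh = build(MESH_EDGES)

print("tree, A fails:", sorted(blacked_out(tree, "A")))
print("mesh, A fails:", sorted(blacked_out(mesh, "A")))
```

In the tree, losing A takes B and C down with it; in the mesh, the two extra lines keep them supplied. The price of that redundancy is the balancing problem described above, which this connectivity check does not model.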

What is new with smart IWB 2020 is that regulation is no longer done simply by releasing more water when the network frequency drops under 49.8 Hz and by pumping water back up when the frequency rises over 50.2 Hz. As the figure above shows, there are many more sources of electricity that have to be synchronized and balanced out.
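The classic rule is simple enough to write down in a few lines. The sketch below uses the two thresholds from the text; the function name and the "hold" state between the thresholds are our assumptions, and a real controller would of course be far more elaborate.

```python
# Thresholds from the text; everything else is an illustrative assumption.
LOW_HZ = 49.8
HIGH_HZ = 50.2


def pump_storage_action(frequency_hz):
    """Classic dead-band rule for a pumped-storage plant."""
    if frequency_hz < LOW_HZ:
        return "generate"  # too little power on the network: release water
    if frequency_hz > HIGH_HZ:
        return "pump"      # too much power on the network: pump water back up
    return "hold"          # inside the dead band: do nothing (assumed)


for f in (49.7, 50.0, 50.3):
    print(f"{f:.1f} Hz -> {pump_storage_action(f)}")
```

With a single knob like this, one measurement is enough. With many decentralized producers and stores, the decision depends on the state of the whole network, which is why a simulation is needed.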

In 2000, Germany introduced a law to subsidize renewable energies by guaranteeing the producers a profit, i.e., by taking out a major part of their risk to conduct business. In 2004, the European Union liberalized the power market, adding to the mix the windmill farms in Denmark among others. In Germany alone, renewable energy production surged from 6,277 GWh in 2000 to 153,000 GWh in 2015.
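To put the German figures in perspective, the following lines compute the overall growth factor and the equivalent compound annual growth rate. The only inputs are the two production figures quoted above; the assumption of a constant yearly rate is ours and only serves as a summary number.

```python
# Renewable energy production in Germany, as quoted in the text.
START_YEAR, START_GWH = 2000, 6_277
END_YEAR, END_GWH = 2015, 153_000

growth_factor = END_GWH / START_GWH
years = END_YEAR - START_YEAR

# Equivalent constant yearly growth rate (a simplifying assumption).
annual_growth = growth_factor ** (1 / years) - 1

print(f"Growth factor over {years} years: {growth_factor:.1f}x")
print(f"Equivalent annual growth: {annual_growth:.1%}")
```

This works out to a roughly 24-fold increase, or on the order of 24 percent per year sustained over fifteen years.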

The availability of this low-cost renewable energy from the north wreaked havoc on the business model of the Swiss generators, who were generating expensive electricity during the day by draining the high reservoirs and importing cheap electricity at night to pump the water back up from the low reservoirs. Today, solar plants in Germany deliver their maximum energy around noon, exactly the time when pump plants in the Alps used to generate the highest profits.
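The arbitrage behind this business model can be sketched in one formula: each kWh sold at the peak price first had to be pumped up with more than one kWh bought off-peak, because pumping and generating both incur losses. All numbers below, including the round-trip efficiency of 0.75, are hypothetical illustration values and not figures from any of the producers mentioned here.

```python
def arbitrage_margin(peak_price, offpeak_price, round_trip_efficiency):
    """Margin per kWh sold at peak, in the same unit as the prices.

    To sell 1 kWh, the plant must buy 1 / round_trip_efficiency kWh for pumping.
    """
    pumping_cost = offpeak_price / round_trip_efficiency
    return peak_price - pumping_cost


# Hypothetical prices in cents per kWh.
ASSUMED_EFFICIENCY = 0.75

old_world = arbitrage_margin(peak_price=8.0, offpeak_price=3.0,
                             round_trip_efficiency=ASSUMED_EFFICIENCY)
new_world = arbitrage_margin(peak_price=3.0, offpeak_price=3.0,
                             round_trip_efficiency=ASSUMED_EFFICIENCY)

print(f"Large day/night spread: {old_world:+.1f} cents per kWh")
print(f"Spread flattened by noon solar: {new_world:+.1f} cents per kWh")
```

Once the midday price is no higher than the night price, the losses of the pump cycle alone make every stored kWh a loss.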

According to Alpiq CEO Jasmin Staiblin (SRF 25 April 2016), the producer Alpiq can generate only ¼ of its hydropower at a profit, while ½ breaks even and ¼ is sold at a loss. On average, hydropower generation costs Alpiq 6.5 cents per kWh, twice the European market price. Even at its newest generation facilities with the latest turbine designs, the cost is still 3.8 and 4.5 cents per kWh. Alpiq expects that next year or the year after, the open market price will sink to 2 cents per kWh or even slightly less.

The numerous nuclear power plants in France and Switzerland, while also causing losses to the hydroelectric generators, cannot compete with the renewable sources either. At the Gösgen nuclear power plant, production costs in 2014 were 3.4 cents per kWh. In 2015 they were 5.1 cents per kWh; this was due to accounting changes and costs are supposed to sink again, but even 3.4 cents is well above the expected market price of 2 cents. According to GE Chief Productivity Officer Philippe Cochet in Fairfield CT (NZZ 13 January 2016), before the 2008 financial crisis, 7 GW of new power generation capacity was sold in Europe each year; after the crisis, sales dropped to 1.3 GW and in the past two years sales were less than 1 GW.
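The cost figures quoted in the last two paragraphs can be lined up against the two market prices mentioned in this post. The sketch below only subtracts numbers from the text; the dictionary labels are ours, since the individual facilities are not named.

```python
# Production costs in cents per kWh, as quoted in the text.
COSTS = {
    "Alpiq hydropower, average": 6.5,
    "Alpiq newest facility (lower figure)": 3.8,
    "Alpiq newest facility (higher figure)": 4.5,
    "Gösgen nuclear, 2014": 3.4,
}

# Market prices in cents per kWh, as quoted in the text.
PRICES = {
    "Q4 2015 market price": 3.3,
    "expected market price": 2.0,
}


def margin(price, cost):
    """Profit (positive) or loss (negative) per kWh, rounded to 0.01 cents."""
    return round(price - cost, 2)


for price_label, price in PRICES.items():
    print(price_label)
    for source, cost in COSTS.items():
        print(f"  {source}: {margin(price, cost):+.1f} cents per kWh")
```

These are averages, so individual plants can still be profitable, as the ¼ figure above indicates. On average, though, none of the listed sources covers its cost at either price.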

The solution is to use online simulations to not just optimize electric power generation, but all energy management: electricity, hot steam for electricity production, hot water for heating, and warm water for washing. Heat is produced by burning refuse, natural gas, biomass (wood refuse), etc. It is also recovered from data centers, instead of dispersing it in the atmosphere through air conditioning chillers. This photograph by Mathias Leemann shows the refuse burning plant of Basel.

Heat can be stored in water, soil, and stones, as has been done since Roman times. A more contemporary method used by IWB is the use of fuel cells. When there is excess electricity, electrolysis of water is used to generate hydrogen. Hydrogen is also produced from natural gas when consumption is low. This hydrogen is easy to store. When electricity prices are high, hydrogen fuel cells are used to generate electricity.

Coordinating and timing all these sources, stores, carriers, and consumers of energy is a very complex task. Once IWB sells its electricity at the public charge boxes (photograph by Simon Havlik) around the Canton for a much lower price than today's 45 to 70 cents per kWh, cars that burn fossil fuels will disappear very fast. Such is the impact of smart energy management.

So far, we have seen how the online simulation of a complex energy provision system can considerably reduce the cost of energy. However, this does not yet help with the goal of the 2000 Watt Society. If we build our houses with recycled glass shards in the outer concrete walls, then use 12 cm of insulation and cover it with 16 cm of solid wood on the inside, and also give up private ownership of cars, we might achieve a 3500 Watt Society, said ZHAW sustainability expert Prof. Andreas Hofer (SRF 26 November 2015).

50 years ago, people got by with less than 2000 Watt. Where is the problem? It is not at the individual level but at the society level. We have become much more mobile: if you live in Lugano, you no longer go to San Moritz for an extended weekend, but to Paris. Also, we have become digital packrats. All over the world, we have huge server farms that store all the digital media we never consume but which is valuable to social network companies, who use it to dissect our lives and sell us stuff we do not really need.

Back to the virtual print factory:

The output of the prepress stage is a PDF file. The two presses take raster images, therefore the computer in front of the press has to do the ripping and is called the digital front-end. In John L. Recker et al., "Font rendering on a GPU-based raster image processor," Proc. SPIE 7528 (January 18, 2010), the authors calculated that over a year of usage, the regular front-end RIP consumed 38,723 kWh and generated 23,234 kg of CO2, while for the GPU-RIP they built, the corresponding numbers are 10,804 kWh and 6,483 kg.
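To relate these numbers to the 2000 Watt Society, which counts average continuous power per person, we can convert the yearly energy into an average power draw. The sketch below uses only the four figures quoted above; spreading the consumption evenly over 8,760 hours is our simplifying assumption, since the paper's usage profile is not reproduced here.

```python
HOURS_PER_YEAR = 8_760  # assumption: consumption averaged over a full non-leap year

# (energy in kWh per year, CO2 in kg per year), as quoted in the text.
REGULAR_RIP = (38_723, 23_234)
GPU_RIP = (10_804, 6_483)


def average_power_kw(energy_kwh):
    """Average continuous power corresponding to a yearly energy use."""
    return energy_kwh / HOURS_PER_YEAR


def emission_factor(energy_kwh, co2_kg):
    """kg of CO2 per kWh implied by the quoted figures."""
    return co2_kg / energy_kwh


energy_saving = 1 - GPU_RIP[0] / REGULAR_RIP[0]
regular_kw = average_power_kw(REGULAR_RIP[0])
gpu_kw = average_power_kw(GPU_RIP[0])

print(f"Energy saving: {energy_saving:.1%}")
print(f"Average power: {regular_kw:.2f} kW regular vs {gpu_kw:.2f} kW GPU")
print(f"Implied CO2 factor: {emission_factor(*REGULAR_RIP):.2f} kg per kWh")
```

Averaged this way, a single regular front-end draws more than twice the 2000 Watt budget of one person, while the GPU-RIP stays well below it.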

This is the kind of innovation that is required to achieve the 2000 Watt Society at the society level rather than at the individual level. There is still a lot of work to do. We recently wrote that the internet of things is a power guzzler: fortunately the cited report has some good advice.