A few years ago, when "big data" became a buzzword, I attended an event from a major IT vendor about the new trends in the sector. There were presentations on all the hot buzzwords, including a standing-room-only session on big data. After the obligatory corporate title slide came a slide with a gratuitous, unrelated stock photo on the left side, while the right side was taken up by the term "big data" in a font size filling the entire right half of the slide. Unfortunately, the rest of the presentation contained only platitudes, without any actionable information. My takeaway was that "big data" is a new buzzword that is written in a big font size.

After the dust settled, big data became associated with three characteristics: data volume, velocity, and variety. They were proposed in 2001 by META Group's Doug Laney as the dimensions of "3-D data management" (META Group is now part of Gartner). Indeed, today Gartner defines big data as high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.

Volume indicates that the amount of data at rest is of petabyte scale, which requires horizontal scaling. Variety indicates that the data is from multiple domains or types: in a horizontal system, there are no vertical data silos. Velocity refers to the rate of flow; a large amount of data in motion introduces complications like latencies, load balancing, locking, etc.

In the meantime, other 'V' terms have been added, like variability and veracity. Variability refers to a change in velocity: a big data system has to be self-aware, dynamic, and adaptive. Veracity refers to the trustworthiness, applicability, noise, bias, abnormality and other quality properties in the data.

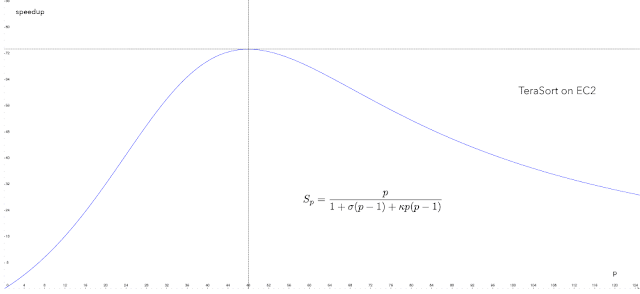

A more recent insight is the clear distinction between scale-up and scale-out systems, captured by a powerful model called the universal scalability law (USL) and dissected in a CACM paper: N. J. Gunther, P. Puglia, and K. Tomasette. Hadoop superlinear scalability. Communications of the ACM, 58(4):46–55, April 2015.
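To make the scalability model concrete, here is a minimal sketch of Gunther's formula for relative capacity, C(N) = N / (1 + σ(N − 1) + κN(N − 1)), where N is the number of nodes. The parameter names `sigma` (contention) and `kappa` (coherency delay) follow the usual notation for this model; the numeric values used below are made up for illustration and are not measurements from any system.

```python
import math


def usl_capacity(n, sigma, kappa):
    """Relative capacity C(N) of an N-node system under the scalability model.

    sigma: contention coefficient (serialized fraction of the work)
    kappa: coherency coefficient (cost of keeping nodes consistent)
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    return n / (1.0 + sigma * (n - 1) + kappa * n * (n - 1))


def peak_nodes(sigma, kappa):
    """Node count at which C(N) is maximal: N* = sqrt((1 - sigma) / kappa)."""
    if kappa <= 0:
        raise ValueError("capacity has no finite peak unless kappa > 0")
    return math.sqrt((1.0 - sigma) / kappa)


if __name__ == "__main__":
    # Illustrative coefficients only (assumed, not measured).
    for n in (1, 8, 32, 128):
        print(n, round(usl_capacity(n, sigma=0.05, kappa=0.0001), 2))
    print("peak near", round(peak_nodes(0.05, 0.0001)), "nodes")
```

With both coefficients at zero the model reduces to ideal linear scaling, C(N) = N. Any positive `kappa` eventually makes adding nodes counterproductive, which is why "nearly linear" is the realistic goal for a scale-out design.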

This model allows us to state that Big Data refers to extensive data sets, primarily in the characteristics of volume, velocity, and/or variety, that require a horizontally scalable architecture for efficient storage, manipulation, and analysis, i.e., for extracting value.

To make this definition actionable, we need an additional concept. Big Data Engineering refers to the storage and data manipulation technologies that leverage a collection of horizontally coupled resources to achieve nearly linear scalability in performance. Now we can drill down a little.

New engineering techniques in the data layer have been driven by the growing prominence of data types that cannot be handled efficiently in a traditional relational model. The need for scalable access to structured and unstructured data has led to software built on name–value / key–value pairs or columnar (big table), document-oriented, and graph (including triple–store) paradigms. A triple-store or RDF store is a purpose-built database for the storage and retrieval of triples through semantic queries. A triple is a data entity composed of subject-predicate-object, like "Bob is 35" or "Bob knows Fred."
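As a rough illustration of the triple idea, the sketch below keeps subject-predicate-object tuples in memory and answers pattern queries where any position may be left open. The `TripleStore` class and its method names are hypothetical; a real RDF store adds indexes, persistence, and a query language such as SPARQL.

```python
class TripleStore:
    """Toy in-memory triple store (hypothetical, for illustration only)."""

    def __init__(self):
        self._triples = set()

    def add(self, subject, predicate, obj):
        """Store one (subject, predicate, object) triple."""
        self._triples.add((subject, predicate, obj))

    def match(self, subject=None, predicate=None, obj=None):
        """Return all triples matching the pattern; None acts as a wildcard."""
        return {
            (s, p, o)
            for (s, p, o) in self._triples
            if (subject is None or s == subject)
            and (predicate is None or p == predicate)
            and (obj is None or o == obj)
        }


store = TripleStore()
store.add("Bob", "age", 35)         # "Bob is 35"
store.add("Bob", "knows", "Fred")   # "Bob knows Fred"
store.add("Fred", "knows", "Alice")

# Whom does Bob know?
bob_knows = store.match(subject="Bob", predicate="knows")
```

The linear scan in `match` is the simplest possible choice; it keeps the example short at the cost of query speed.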

Due to the velocity, it is usually not possible to structure the data when it is acquired (schema-on-write). Instead, data is often stored in raw form. Lazy evaluation is used to cleanse and index data as it is being queried from the repository (schema-on-read). This point is critical to understand because to run efficiently, analytics requires the data to be structured.
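The following sketch shows one way schema-on-read can look in practice, assuming the raw data arrives as JSON lines. Records are stored untouched at ingest time, and a schema is applied lazily, through a generator, only when a query reads them. The function names, the field names, and the choice to skip records that do not fit are all assumptions made for this example.

```python
import json


def ingest(raw_store, line):
    """Schema-on-read ingest: append the raw line without parsing it."""
    raw_store.append(line)


def read_with_schema(raw_lines, schema):
    """Lazily parse and cleanse raw lines at query time.

    schema maps a field name to a cast function, e.g. {"temp": float}.
    Lines that are not JSON objects, or that lack a field or fail a cast,
    are skipped (an assumed cleansing policy).
    """
    for line in raw_lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue
        if not isinstance(record, dict):
            continue
        try:
            yield {field: cast(record[field]) for field, cast in schema.items()}
        except (KeyError, TypeError, ValueError):
            continue


raw_store = []
for line in (
    '{"sensor": "t1", "temp": "21.5"}',
    "not json at all",
    '{"sensor": "t2"}',
    '{"sensor": "t3", "temp": 19}',
):
    ingest(raw_store, line)

readings = list(read_with_schema(raw_store, {"sensor": str, "temp": float}))
```

Because `read_with_schema` is a generator, nothing is parsed until a consumer iterates over it, so the cost of structuring the data is paid per query rather than per write.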

The end-to-end data lifecycle consists of four steps: collection, preparation, analysis, and action. In a traditional system, the data is stored in persistent storage after it has been munged (extract, transform, load, followed by cleansing; a.k.a. wrangling or shaping). In traditional use cases, the data is prepared and analyzed for alerting: schema-on-write. Only afterward are the data or aggregates of the data given persistent storage. This is different from high-velocity use cases, where the data is often stored raw in persistent storage: schema-on-read.

Veracity refers to the trustworthiness, applicability, noise, bias, abnormality and other quality properties in the data. Current technologies cannot assess, understand, and exploit veracity throughout the data lifecycle. This is a big data characteristic that presents many opportunities for disruptive products.

In big data systems, IO bandwidth is often the limiting resource, yet processor chips can have idle cores due to the IO gap (the number of cores on a chip is increasing, while the number of pins is constant). Big data engineering therefore seeks to embed some local programs, such as filtering, parsing, indexing, and transcoding, in the storage nodes. This is only possible when the analytics and discovery systems are tightly integrated with the storage system. The analytics programs must be horizontal not only in that they process the data in parallel, but also in that operations accessing localized data are, when possible, distributed to separate cores in the storage nodes, depending on the size of the IO gap.
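The idea of running the filter where the data lives can be sketched as follows. Each simulated storage node scans its own records and returns only the matches, so the coordinator never sees the full raw data. `StorageNode` and `pushdown_query` are hypothetical names, and threads in one process merely stand in for cores on separate storage nodes; this is not a description of any particular product.

```python
from concurrent.futures import ThreadPoolExecutor


class StorageNode:
    """Simulated storage node that holds a local slice of the data."""

    def __init__(self, name, records):
        self.name = name
        self._records = list(records)

    def scan(self, predicate):
        """Filter locally; only matching records leave the node."""
        return [r for r in self._records if predicate(r)]


def pushdown_query(nodes, predicate):
    """Send the predicate to every node in parallel and merge the results."""
    if not nodes:
        return []
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        # map() yields results in node order, so the merge is deterministic.
        partials = pool.map(lambda node: node.scan(predicate), nodes)
        return [record for part in partials for record in part]


nodes = [
    StorageNode("node-a", [1, 5, 12]),
    StorageNode("node-b", [20, 3]),
]
hot = pushdown_query(nodes, lambda value: value > 4)
```

In this toy run only three of the five stored values cross the simulated network, which is the whole point of embedding the filter in the storage layer.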

With this background, we can attempt a formal definition of big data:

Big Data is a data set(s), with characteristics (e.g., volume, velocity, variety, variability, veracity) that for a particular problem domain at a given point in time cannot be efficiently processed using current / existing / established / traditional technologies and techniques in order to extract value.

Big data is a relative and not an absolute term. Big data essentially focuses on the self-referencing viewpoint that data is big because it requires scalable systems to handle, and architectures with better scaling have come about because of the need to handle big data.

The era of a trillion sensors is upon us. Traditional systems cannot extract value from the data they produce. This has stimulated Peaxy to invent new ways for scalable storage across a collection of horizontally coupled resources, and a distributed approach to querying and analytics. Often, the new data models for big data are lumped together as NoSQL, but we can classify them at a finer scale as big table, name–value, document, and graph models, with the common implementation paradigm of intransigent distributed computing.

A key attribute of advanced analytics is the ability to correlate and fuse the data from many domains and types. In a traditional system, data munging and pre-analytics are used to extract features that allow integrating with other data through a relational model. In big data analytics, the wide range of data formats, structures, timescales, and semantics we want to integrate into an analysis presents a complexity challenge. The volume of the data can be so large that it cannot be moved to be integrated, at least in raw form. Solving this problem requires a dynamic, self-aware, adaptive storage system that is integrated with the analytics system and can optimize the locus of the various operations depending on the available IO gap and the network bandwidth at every instant.

In big data, we often need to process the data in real time or at least in near real time. Sensors are networked and can even be meshed, where the mesh can perform some recoding functions. The data rate can vary over orders of magnitude in very short time intervals. While streaming technology has been perfected over many decades in communications, in big data the data flow and its variability are still largely unexplored territory. The data rate is not the only big data characteristic presenting variability challenges. Variability also refers to changes in format or structure, semantics, and quality.

In view of recent world events, from Edward Snowden's leaks to Donald Trump's call on Bill Gates to close the Internet, the privacy and security requirements for big data are stringent but also in great flux due to the invalidation of the Safe Harbor framework. According to a 2015 McKinsey study, cybersecurity is the greatest risk for Industry 4.0, with €50 billion in annual reported damage to the German manufacturing industry caused by cyberattacks.

With all these requirements, it is not surprising that there are only a handful of storage products that are both POSIX and HDFS compliant, highly available, cybersecure, fully distributed, and scalable.