In the early days of digital imaging for office use, it was an article of faith that the response of the system had to be completely neutral. The motivation came from a military specification requiring that a seventh-generation copy still look good and be readable. If there were a deviation from neutrality, every generation would amplify it.

When a Japanese manufacturer entered the color copier market, they enhanced the images to improve memory colors and boost the overall contrast. They were not selling to the government and rightly noted that commercial users do not make multiple-generation copies.

Color is not a physical phenomenon; it is an illusion, and color imaging is about predicting illusions. Visit your art gallery and look carefully at an original Rembrandt van Rijn painting. The perceived dynamic range is considerably larger than the gamut of the paints he used because he distorted the colors depending on their semantics. With Michel Eugène Chevreul's discovery of simultaneous contrast, the impressionists then went wild.

When years later I interviewed for a position in a prestigious lab, I gave a talk on how, based on my knowledge of the human visual system (HVS), I could implement a number of algorithms that greatly enhance the images printed on consumer and office printers. The hiring engineering managers thought I was out of my mind, and I did not get the job. For them, the holy grail was the perfect neutral transmission function.

This neutral color reproduction was a figment of the imagination in the minds of the engineers working in the early days of digital color reproduction. The scientists who had earlier invented mechanical color reproduction did not have this hang-up. As Evans observed on page 599 of [R.M. Evans, “Visual Processes and Color Photography,” JOSA, Vol. 33, No. 11, pp. 579–614, November 1943], under the illuminator condition, color constancy mechanisms in the human visual system (HVS) correct for improper color balance. As Evans further notes on page 596, we tend to remember colors rather than to look at them closely; for the most part, he notes, careful observation of stimuli is made only by trained observers. Evans concludes that it is seldom necessary to obtain an exact color reproduction of a scene to obtain a satisfying picture, although it is necessary that the reproduction not violate the principle that the scene could have thus appeared.

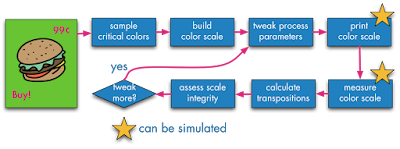

We can interpret Evans’ consistency principle (page 600) as saying that what matters is the relation among the colors in a reproduced image, not their absolute colorimetry. A color reproduction system must preserve the integrity of the relation among the colors in the palette. In practice, this suggests that three conditions should be met. The first is that the order in a critical color scale should not have transpositions; the second is that a color should not cross a name boundary; the third is that the field of reproduction error vectors of all colors should be divergence-free. The intuition for the divergence condition is that no virtual light source is introduced, thus supporting color constancy mechanisms in the HVS.

We all know the Farnsworth-Munsell 100 hue test. The underlying idea is that an observer with poor color discrimination, whether due to a color vision deficiency or to lack of practice, will not be able to sort 100 specimens varying only in hue. In the test, the number of hue transpositions is counted to score an observer.
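Counting transpositions can be sketched in a few lines. The function below is only an illustration of the idea: it assumes each specimen carries the number of its correct position, and it counts every pair that ends up in the wrong relative order. The name `count_transpositions` and the sample arrangements are made up for this sketch, and the published scoring procedure of the test is more elaborate than this simple count.

```python
def count_transpositions(arrangement):
    """Count pairs of specimens that appear in the wrong relative order.

    `arrangement` lists the specimens in the order chosen by the observer;
    each entry is the number of the specimen's correct position.
    """
    count = 0
    for i in range(len(arrangement)):
        for j in range(i + 1, len(arrangement)):
            if arrangement[i] > arrangement[j]:
                count += 1
    return count


# Hypothetical arrangements of a six-specimen partial scale.
perfect = [1, 2, 3, 4, 5, 6]
one_swap = [1, 2, 4, 3, 5, 6]

print(count_transpositions(perfect))   # 0
print(count_transpositions(one_swap))  # 1
```

A perfectly sorted scale scores zero, and each pair of specimens that trades places adds to the score.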

We can considerably reduce the complexity of a color reproduction system if we focus on the colors that actually are important in the specific images being reproduced. We can choose the minimal quality of the colorants, paper, and halftoning algorithm, and the minimal number of colorants, that just preserve the consistency of that restricted palette.

First, we determine the palette. Then we reproduce a color scale with a selection of the above parameters and give the resulting color scale image a partial Farnsworth-Munsell hue test. This is the idea behind invention 9,355,339. The non-obvious step is how to select color scales and how to use a color management system to simulate a reproduction. The whole process is automated.

A graphic artist manually identifies the locus of the colors of interest, which is different for each application domain, like reproductions of cosmetics or denim clothes. A scale encompassing these critical colors is built and printed in the margin. In the physical application, the colors are measured and the transpositions are counted.

The more interesting case is a simulation. There is no printing, just the application of halftoning algorithms and color profiles. The system extracts the final color scale and identifies the transpositions compared to the original. Why is the simulation more important?

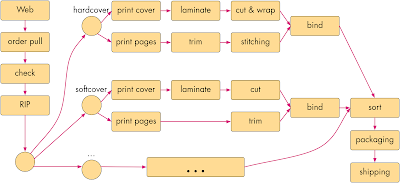

Commercial printing is a manufacturing process and workflow design is crucial to the financial viability of the factory. Printing is a mass-customization process, thus every day in the shop is different. This makes it impossible to assume a standard workload.

For each manufacturing task, there are different options using different technologies. For example, a large display piece can be printed on a wide bubblejet printer, or the fixed background can be silkscreen printed and the variable text can be added on the wide bubblejet printer. Another example is that a booklet can be saddle stitched, perfect bound, or spiral bound.

In an ideal case, a print shop floor can be designed in a way to support such reconfigurable fulfillment workflows, as shown in this drawing (omitted here are the buffer storage areas).

However, this would not be commercially viable. In manufacturing, the main cost item is labor. Different fulfillment steps require different skill levels (at different salary levels) and take different amounts of time.

Additionally, not all print jobs are fulfilled at the same speed. A rush job is much more profitable than a job with a flexible delivery time. To stay in business, the plant manager must be able to accommodate as many rush jobs as possible.

This planning job is similar to that of scheduling trains in a saturated network like the New Railway Link through the Alps (NRLA or NEAT for Neue Eisenbahn-Alpentransversale, or simply AlpTransit). For freight, it connects the ports of Genoa and Rotterdam, with an additional interchange in Basel (the Rotterdam–Basel–Genoa corridor). For passengers, it connects Milan to Zurich and beyond. There are two separate base tunnel systems: the older Lötschberg axis and the newer Gotthard axis. In the latter, freight trains travel at a minimum of 100 km/h and passenger trains at a minimum of 200 km/h. These are the operational speeds; the maximum speed is 249 km/h, limited by the available power supply.

Most of the trains are freight trains, traveling at the lower operational speed. A smaller number of passenger trains travel at twice that speed. A freight train can be a little late, but a passenger train must always be on time lest people miss their connections, which is not acceptable.

The trains must be scheduled so that passenger trains can pass freight trains when these are at a station and can move to a bypass rail. However, there can be unforeseen events like accidents, natural disasters, and strikes that can delay the trains on their route. The manager will counter these problems by accelerating trains already in the system above their operational speed when there is sufficient electric power to do so. This way, when the problem is solved, there is additional capacity available.

The problem with modifying the schedule is that one cannot just accelerate trains: new bypass stations have to be determined and the travel speeds have to be fine-tuned. Train systems are an early implementation of Industry 4.0 because the trains also automatically communicate with each other to avoid collisions and to optimize rail usage. For AlpTransit this required solving the political problem of forcing all European countries to adopt the new ERTMS/ETCS (European Rail Traffic Management System / European Train Control System) Level 2, to which older locomotives cannot be upgraded.

The regular jobs and rush jobs in a print fulfillment plant are similar. The big difference is that the train schedule is the same every day, while in printing each day is completely different. The job of the plant designer is to predict the bottlenecks and do a cost analysis to alleviate these bottlenecks. In particular, deadlocks have to be identified and mitigated. There are two main parameters: the number and speed of equipment, and the amount of buffer space. Buffering is highly nonlinear and cannot be estimated by eye or from experience. The only solution is to build a model and then simulate it.

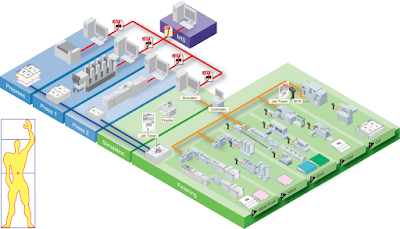

We used Ptolemy II for the simulation framework and wrote Java actors for each manufacturing step. To find and mitigate the bottlenecks, and especially to find the dreaded deadlock conditions, we just need to code timing information in the Java actors and run Monte Carlo simulations.
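To give a feel for why buffering has to be simulated, here is a minimal sketch in plain Python rather than Ptolemy II. It is not the actor model described above: it assumes a hypothetical plant with only two stations (printing feeding binding) separated by a buffer with a fixed number of slots, exponentially distributed step times, and at least one job per run. The function names and all parameter values are invented for illustration. The sketch measures how long the printing station sits blocked because the buffer is full; it does not detect deadlocks.

```python
import random


def simulate_line(print_times, bind_times, buffer_slots):
    """Simulate printing -> buffer -> binding for a non-empty list of jobs.

    Returns (makespan, blocked_time), where blocked_time is the total time
    the printing station holds a finished job because the buffer is full.
    """
    n = len(print_times)
    leave_print = [0.0] * n   # time each job leaves the printing station
    start_bind = [0.0] * n    # time each job starts binding
    finish_bind = [0.0] * n   # time each job finishes binding
    blocked = 0.0
    for i in range(n):
        start_print = leave_print[i - 1] if i > 0 else 0.0
        finish_print = start_print + print_times[i]
        if buffer_slots == 0:
            # No buffer: the job waits until binding has finished the previous job.
            free_at = finish_bind[i - 1] if i > 0 else 0.0
        else:
            # A slot frees up when job i - buffer_slots starts binding.
            k = i - buffer_slots
            free_at = start_bind[k] if k >= 0 else 0.0
        leave_print[i] = max(finish_print, free_at)
        blocked += leave_print[i] - finish_print
        previous_done = finish_bind[i - 1] if i > 0 else 0.0
        start_bind[i] = max(leave_print[i], previous_done)
        finish_bind[i] = start_bind[i] + bind_times[i]
    return finish_bind[-1], blocked


def mean_blocked_time(buffer_slots, jobs=200, runs=100, seed=1):
    """Monte Carlo estimate of the blocked time, averaged over random days."""
    rng = random.Random(seed)  # same seed, so every buffer size sees the same days
    total = 0.0
    for _ in range(runs):
        print_times = [rng.expovariate(1.0) for _ in range(jobs)]
        bind_times = [rng.expovariate(1.0) for _ in range(jobs)]
        total += simulate_line(print_times, bind_times, buffer_slots)[1]
    return total / runs


for slots in (0, 1, 2, 5, 10):
    print(slots, round(mean_blocked_time(slots), 1))
```

Running the loop for several buffer sizes shows how the blocked time changes with each additional slot, which is the kind of relationship that is hard to estimate by eye.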

We used a compute cluster with the data encrypted at rest, and Ganymed SSH-2 for certificate-based authentication and encryption on the wire. Each actor could run on a separate machine in the cluster. The system supports the well-dimensioned design of the plant, its enhancement through modernization and expansion, and the daily scheduling.

So far, the optimization is just based on time. In a print fulfillment plant, there are also frequent mistakes in the workflow definition. The workflow for a job is stored in a so-called ticket (believe me, reaching a consensus standard was more difficult than for ERTMS/ETCS). One of the highest costs in a plant is an error in the ticket, which causes the job to be repeated after the ticket has been amended. Risk mitigation through ticket verification is therefore a highly valuable function, because not having to allocate insurance expenses reduces cost considerably.

While in office printing A4 or letter size paper is the norm, commercial printers use the largest possible paper size to save printing time and, with it, cost. This entails ganging and imposition, folding, rotating, cutting, etc. It is easy to make an imposition mistake, so that pages end up in the wrong document or in the wrong place. Similarly, paper can be cut at the wrong point in the process or folded incorrectly.
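As a small illustration of how easily imposition goes wrong, the sketch below computes the page layout for one simple case: a single-signature saddle-stitched booklet with two pages per side of each sheet. This case, the function names, and the faulty layout in the example are assumptions chosen for illustration; real tickets cover many more layouts.

```python
def saddle_stitch_imposition(page_count):
    """Return the sheets of a saddle-stitched booklet, outermost sheet first.

    Each sheet is ((front_left, front_right), (back_left, back_right)),
    with pages numbered from 1.
    """
    if page_count <= 0 or page_count % 4 != 0:
        raise ValueError("page count must be a positive multiple of 4")
    sheets = []
    for s in range(page_count // 4):
        front = (page_count - 2 * s, 2 * s + 1)
        back = (2 * s + 2, page_count - 2 * s - 1)
        sheets.append((front, back))
    return sheets


def check_imposition(sheets, page_count):
    """Return (missing_pages, duplicated_pages) for a proposed layout."""
    placed = [page for front, back in sheets for page in front + back]
    missing = sorted(set(range(1, page_count + 1)) - set(placed))
    duplicated = sorted({page for page in placed if placed.count(page) > 1})
    return missing, duplicated


print(saddle_stitch_imposition(8))
# [((8, 1), (2, 7)), ((6, 3), (4, 5))]

# A hypothetical ticket error: page 3 placed twice, page 5 never placed.
faulty = [((8, 1), (2, 7)), ((6, 3), (4, 3))]
print(check_imposition(faulty, 8))
# ([5], [3])
```

A check of this kind only catches missing and duplicated pages. A page in the wrong position on the right sheet would need a comparison against the expected layout.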

Once we have a simulation of the print fulfillment factory, we can easily solve these workflow problems, thus reducing risk and with it insurance cost. The data for print jobs is stored in portable document format (PDF) files. For each workstation in a print fulfillment plant, we take the Java actor implemented for the simulation and add input and output ports for PDF files. We then implement a PDF transformer for each workstation that applies the work step to the PDFs. There can be multiple input and output PDFs. For example, a ganging workstation takes several PDF files and outputs a new PDF file for each imposed sheet.

Most errors happen when a ticket is compiled. After simulating the workflow, the operator simply checks the resulting PDF files. A mistake is immediately visible and can be diagnosed by looking at the intermediate PDF files. A more subtle error source arises when workstations negotiate workflow changes among themselves, in the spirit of Industry 4.0. Before the change can be approved, the workflow has to be simulated again and the difference between the two output PDF files has to be computed.

A more valuable, but also more complex workflow change is accommodating rush jobs by taking shortcuts. For example, if there is a spot color in the press, can we reuse the same color or do we have to clean out the machine to change or remove the spot color? Another example is the question of using dithering instead of stochastic halftoning to expedite a job. Finally, earlier we mentioned the possibility of running a fixed background through a silkscreen press and printing only the variable contents on a bubblejet press.

In a conventional setting, any such change requires doing a new press-check and having the customer come in to approve it. In practice, this is not always realistic and the owner will use his best judgment to self-approve the proof.

Invention 9,355,339 automates this check and approval. The ICC profiles are available and can be used to compute the perceived colors in each case. The transposition score for the color scale (there can be more than one) can predict the customer's approval of the press-check.
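The decision step might look roughly like the following sketch. It is not the patented method: it assumes the perceived colors of each scale have already been computed (for example through the ICC profiles) and reduced to one number per patch, such as a hue angle. The scale names, the patch values, the function names, and the `max_transpositions` threshold are all invented for illustration.

```python
def transposition_score(original, reproduced):
    """Count patch pairs whose order in the reproduction is reversed."""
    if len(original) != len(reproduced):
        raise ValueError("both scales must have the same number of patches")
    score = 0
    for i in range(len(original)):
        for j in range(i + 1, len(original)):
            if (original[j] - original[i]) * (reproduced[j] - reproduced[i]) < 0:
                score += 1
    return score


def predict_approval(scales, max_transpositions=0):
    """Predict approval: every scale must stay within the allowed score."""
    return all(
        transposition_score(original, reproduced) <= max_transpositions
        for original, reproduced in scales.values()
    )


# Hypothetical hue angles (degrees) for two critical color scales:
# the originals and their simulated reproductions after a shortcut.
scales = {
    "denim": ([230, 235, 240, 245], [228, 236, 234, 246]),
    "skin": ([20, 25, 30, 35], [22, 26, 31, 33]),
}

print(transposition_score(*scales["denim"]))           # 1
print(transposition_score(*scales["skin"]))            # 0
print(predict_approval(scales))                        # False
print(predict_approval(scales, max_transpositions=1))  # True
```

Where to set the threshold is a business decision. The sketch only shows that, once the scales are simulated, the approval check reduces to a count and a comparison.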

Thus, we have created a Java actor that simulates a human and can predict the human's perception. This is an Industry 4.0 application where we have semantic models not only for the machines but also for the humans, and the machines can take the humans into consideration.