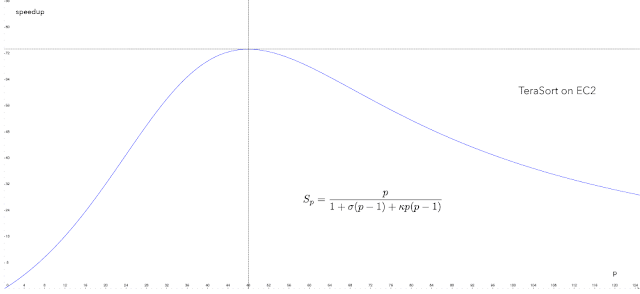

In the post on big data, we mentioned Gunther's universal scalability model.

In this model, p is the number of processors or cluster nodes and Sp is the speedup with p nodes. σ represents the degree of contention in the system and κ the lack of coherency in the distributed data. An example of contention is waiting in a message queue (bottleneck saturation), and an example of incoherency is updating the processor caches (non-local data exchange).
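To get a feeling for the model, we can tabulate it. The sketch below assumes the usual form of Gunther's model, Sp = p / (1 + σ(p − 1) + κp(p − 1)). The function name `usl_speedup` and the values of σ and κ are made up for illustration and are not taken from the paper.

```python
def usl_speedup(p, sigma, kappa):
    """Speedup Sp on p nodes, assuming Sp = p / (1 + sigma(p-1) + kappa p(p-1))."""
    return p / (1.0 + sigma * (p - 1) + kappa * p * (p - 1))


# Illustrative parameter values only; they are not taken from Gunther's paper.
SIGMA, KAPPA = 0.05, 0.0004

for p in (1, 8, 32, 48, 64, 128):
    print(f"p = {p:3d}  Sp = {usl_speedup(p, SIGMA, KAPPA):6.2f}")
```

With σ = κ = 0 the function returns p, the ideal linear speedup. With both terms present, the speedup first rises and then falls again as nodes are added.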

When we do measurements, Tp is the runtime on p nodes, and Sp = T1 / Tp is the speedup with p nodes. When we have enough data, we can estimate σ and κ for our system and dataset using nonlinear statistical regression.
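As a rough sketch of such an estimate, assume the usual form of the model, Sp = p / (1 + σ(p − 1) + κp(p − 1)). Substituting x = p − 1 and y = p / Sp − 1 turns it into the parabola y = κx² + (σ + κ)x through the origin. The code below fits that parabola by ordinary least squares, which is a simpler stand-in for a full nonlinear regression and weights the measurement errors differently. The function names and the synthetic runtimes are hypothetical.

```python
def usl_speedup(p, sigma, kappa):
    """Speedup on p nodes under the assumed form of the model."""
    return p / (1.0 + sigma * (p - 1) + kappa * p * (p - 1))


def fit_usl(nodes, runtimes):
    """Estimate (sigma, kappa) from runtimes Tp measured on the given node counts.

    nodes must contain p = 1, because the speedup is Sp = T1 / Tp.
    """
    t1 = runtimes[nodes.index(1)]
    # Linearization: x = p - 1, y = p / Sp - 1 = kappa * x^2 + (sigma + kappa) * x
    xs = [p - 1 for p in nodes]
    ys = [p / (t1 / t) - 1 for p, t in zip(nodes, runtimes)]
    # Normal equations for y = a * x^2 + b * x (no intercept)
    s4 = sum(x ** 4 for x in xs)
    s3 = sum(x ** 3 for x in xs)
    s2 = sum(x ** 2 for x in xs)
    sx2y = sum(x * x * y for x, y in zip(xs, ys))
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = s4 * s2 - s3 * s3
    a = (sx2y * s2 - sxy * s3) / det
    b = (s4 * sxy - s3 * sx2y) / det
    return b - a, a  # sigma = b - a, kappa = a


# Synthetic "measurements" generated from made-up parameters, not real data.
nodes = [1, 2, 4, 8, 16, 32, 64]
runtimes = [100.0 / usl_speedup(p, 0.05, 0.0004) for p in nodes]

sigma_hat, kappa_hat = fit_usl(nodes, runtimes)
print(f"sigma = {sigma_hat:.4f}, kappa = {kappa_hat:.6f}")
```

Because the synthetic runtimes carry no noise, the fit should return the parameters used to generate them. With real measurements, a proper nonlinear regression on Sp itself is the safer choice.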

The model makes it easy to understand the difference between scale-up and scale-out architectures. In a scale-up system, you can increase the speedup by reducing the contention, for example by adding memory or by bonding network ports. When you play with σ, you will learn that you can increase the speedup, but you can hardly change the number of processors at which the speedup peaks; it remains at 48 nodes in the example in Gunther's paper.

In a scale-out architecture, you also play with κ, and you learn that you can additionally shift the maximum to a larger number of nodes. In Gunther's paper, they can move the maximum to 95 nodes by optimizing the system to exchange less data.
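The two knobs can be compared numerically. Assuming the usual form of the model, Sp = p / (1 + σ(p − 1) + κp(p − 1)), setting the derivative with respect to p to zero puts the peak at p* = sqrt((1 − σ) / κ). The sketch below evaluates this for made-up parameter values (not the ones in Gunther's paper); `usl_peak` is a hypothetical helper name.

```python
import math


def usl_speedup(p, sigma, kappa):
    """Speedup on p nodes under the assumed form of the model."""
    return p / (1.0 + sigma * (p - 1) + kappa * p * (p - 1))


def usl_peak(sigma, kappa):
    """Node count p* = sqrt((1 - sigma) / kappa) at which the speedup peaks."""
    return math.sqrt((1.0 - sigma) / kappa)


# Illustrative values only: a baseline, halved contention, and quartered incoherency.
cases = {
    "baseline": (0.05, 0.0004),
    "less contention": (0.025, 0.0004),
    "less coherency traffic": (0.05, 0.0001),
}

peaks = {}
for name, (sigma, kappa) in cases.items():
    p_star = usl_peak(sigma, kappa)
    peaks[name] = p_star
    print(f"{name:24s} peak at p = {p_star:6.1f}, "
          f"Sp = {usl_speedup(p_star, sigma, kappa):5.2f}")
```

In this sketch, halving σ raises the peak speedup but shifts its position by less than one node, whereas dividing κ by four doubles the node count at which the peak occurs.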

This shows that scale-up and scale-out are not simply about using faster system components versus using more components in parallel. In both cases, you have a plurality of nodes, but you optimize the system differently. In scale-up, you find bottlenecks and then mitigate them. In scale-out, you also work on the algorithms to reduce data exchange.

Since the incoherency term is quadratic in the number of nodes, you get more bang for the buck by reducing the coherency workload. This leads to adding more nodes instead of increasing the performance of the individual nodes, the latter usually being a much more expensive proposition.

In big data, scale-out, or horizontal scaling, is the key approach to achieving scalability. While this is obvious to anybody who has done GPGPU programming, it is less so for those whose experience is limited to monolithic apps.
